Enterprise-Grade NVIDIA GPU Infrastructure

Our state-of-the-art data centers are equipped with the latest NVIDIA GPU hardware, providing unparalleled compute power for your most demanding AI and HPC workloads.

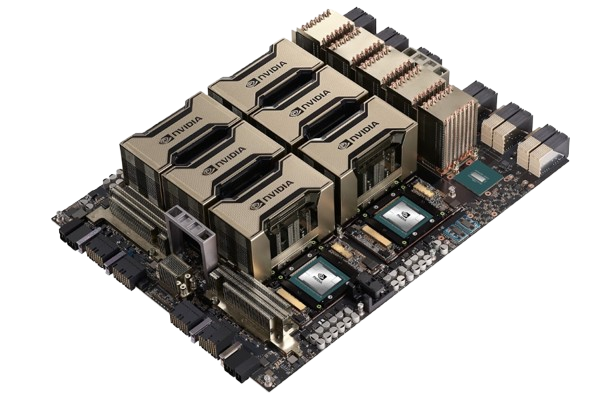

NVIDIA A100 Tensor Core GPU

The industry-leading GPU for accelerating AI, data analytics, and high-performance computing workloads. The A100 delivers unprecedented acceleration at every scale.

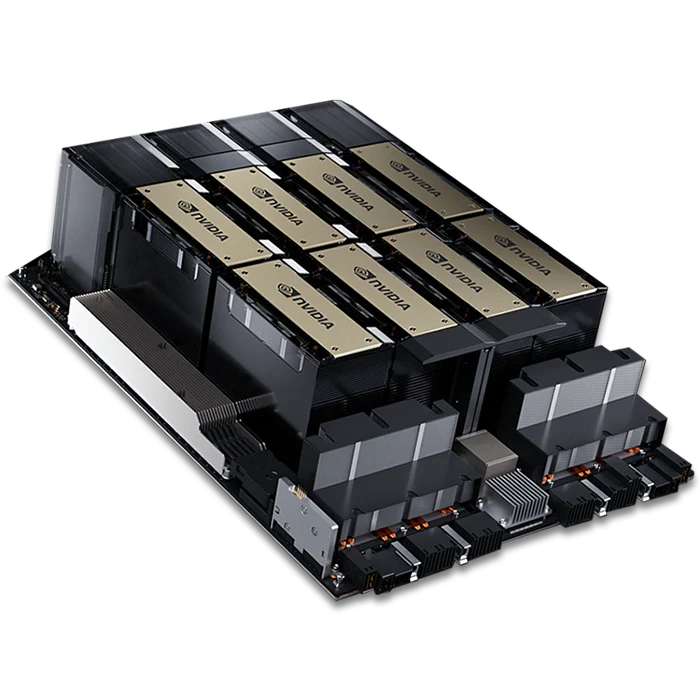

NVIDIA H100 Tensor Core GPU

The latest flagship GPU based on the Hopper architecture, delivering extraordinary performance for AI, HPC, and data analytics applications with groundbreaking technologies.

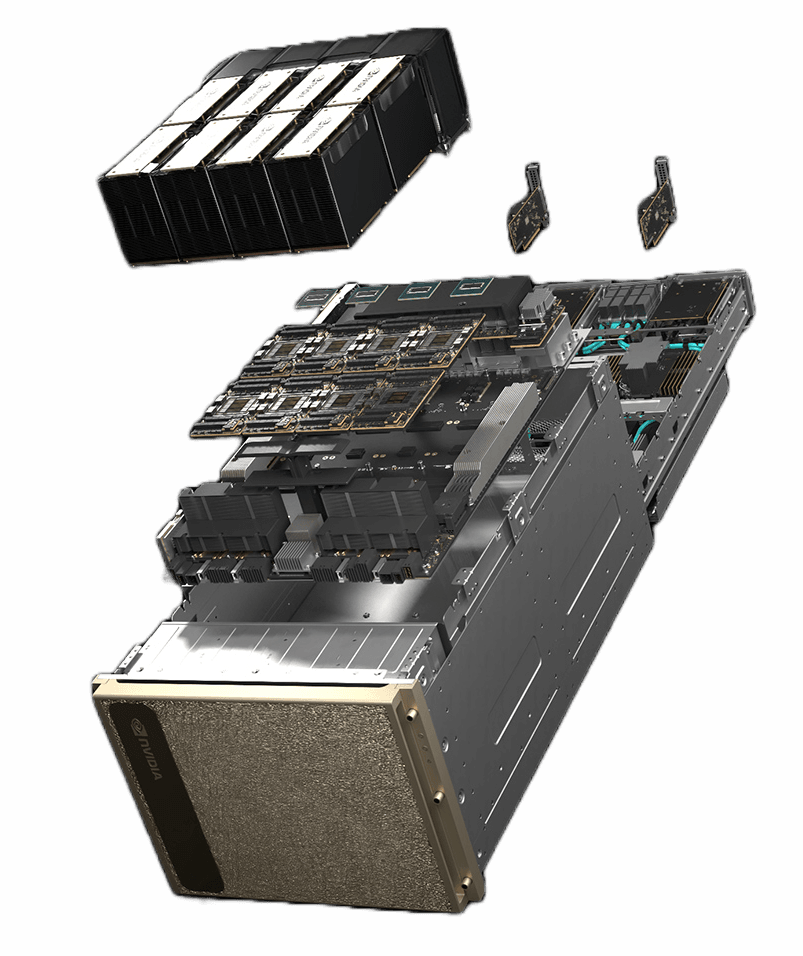

NVIDIA DGX Systems

Our data centers are equipped with NVIDIA DGX systems, the universal AI infrastructure designed to accelerate complex workloads. These purpose-built systems combine multiple GPUs with high-speed networking and optimized software.

Scalable Architecture

Leverage multiple GPUs with NVLink interconnect for massive parallel computing capabilities

NVIDIA AI Enterprise Suite

Pre-optimized software stack with frameworks, tools, and libraries for AI development

High-Speed Networking

InfiniBand networking for low-latency, high-bandwidth connections between systems

| GPU Model | VRAM | Tensor Cores | FP16 Performance | Availability |

|---|---|---|---|---|

| NVIDIA RTX A4000 | 16GB | 40 | 19.2 TFLOPS | On-demand |

| NVIDIA A10 | 24GB | 72 | 31.2 TFLOPS | On-demand |

| NVIDIA A100 (40GB) | 40GB | 432 | 78 TFLOPS | Reserved |

| NVIDIA A100 (80GB) | 80GB | 432 | 78 TFLOPS | Reserved |

| NVIDIA H100 (80GB) | 80GB | 528 | 989 TFLOPS | Enterprise Only |