Solutions for Tomorrow's Most Demanding Workloads

Our GPU-accelerated infrastructure is optimized for the most computationally intensive tasks across various industries, delivering unprecedented performance and efficiency.

Stock Investment Analysis

Revolutionize financial services with our AI-powered solutions led by the groundbreaking GULU Stock AI—Malaysia's first dedicated AI platform for Bursa Malaysia stock analysis, developed by Ts. Dr. Leong Yee Rock. Our advanced GPU infrastructure processes vast financial datasets at unprecedented speeds, providing institutions with real-time insights and predictive capabilities.

- GULU Stock AI: Transformer-based model specifically fine-tuned for Malaysian equities analysis with contextual understanding of local market dynamics

- Proprietary sentiment analysis algorithms that process financial news in multiple languages including Bahasa Malaysia, English, and Chinese

- Advanced pattern recognition systems that identify emerging market trends before they become apparent to traditional analysis

- Enterprise-grade deployment infrastructure with model versioning, A/B testing capabilities, and secured API endpoints for production environments

Enterprise-Grade NVIDIA GPU Infrastructure

Our state-of-the-art data centers are equipped with the latest NVIDIA GPU hardware, providing unparalleled compute power for your most demanding AI and HPC workloads.

NVIDIA A100 Tensor Core GPU

The industry-leading GPU for accelerating AI, data analytics, and high-performance computing workloads. The A100 delivers unprecedented acceleration at every scale.

NVIDIA H100 Tensor Core GPU

The latest flagship GPU based on the Hopper architecture, delivering extraordinary performance for AI, HPC, and data analytics applications with groundbreaking technologies.

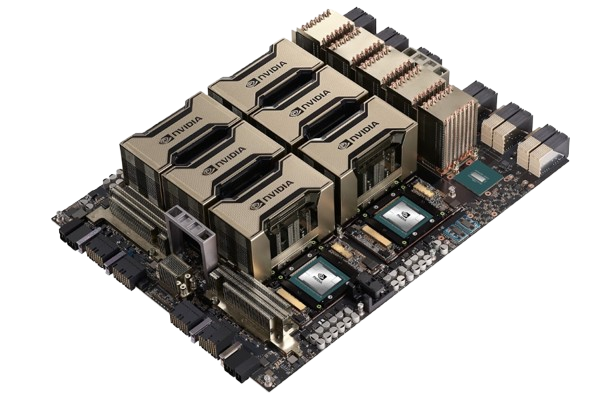

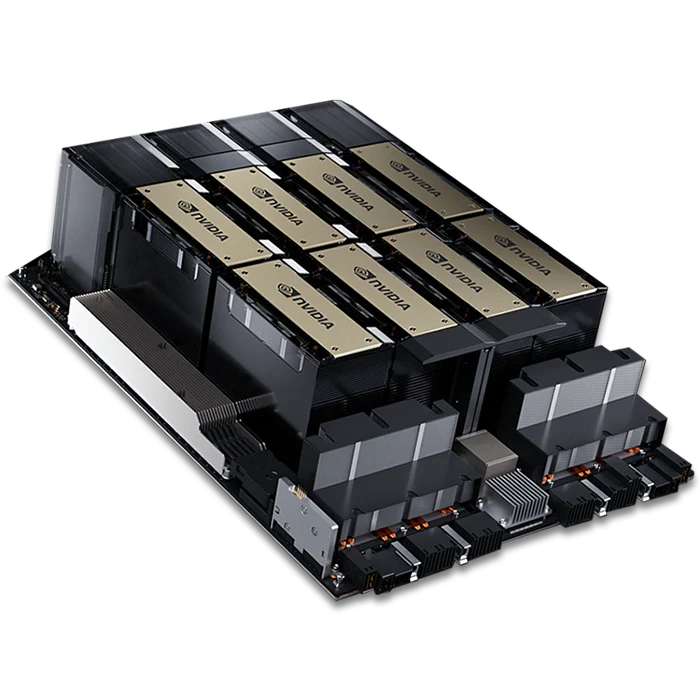

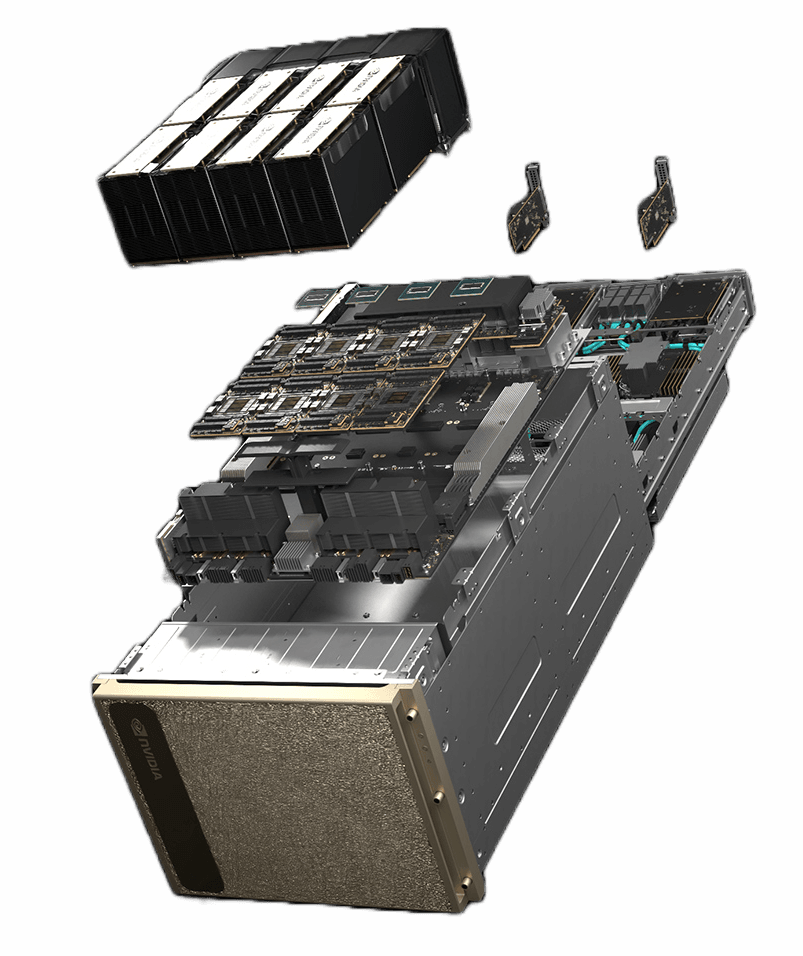

NVIDIA DGX Systems

Our data centers are equipped with NVIDIA DGX systems, the universal AI infrastructure designed to accelerate complex workloads. These purpose-built systems combine multiple GPUs with high-speed networking and optimized software.

- Scalable Architecture Leverage multiple GPUs with NVLink interconnect for massive parallel computing capabilities

- NVIDIA AI Enterprise Suite Pre-optimized software stack with frameworks, tools, and libraries for AI development

- High-Speed Networking InfiniBand networking for low-latency, high-bandwidth connections between systems

Available GPU Models

| GPU Model | VRAM | Tensor Cores | FP16 Performance | Availability |

|---|---|---|---|---|

| NVIDIA RTX A4000 | 16GB | 40 | 19.2 TFLOPS | On-demand |

| NVIDIA A10 | 24GB | 72 | 31.2 TFLOPS | On-demand |

| NVIDIA A100 (40GB) | 40GB | 432 | 78 TFLOPS | Reserved |

| NVIDIA A100 (80GB) | 80GB | 432 | 78 TFLOPS | Reserved |

| NVIDIA H100 (80GB) | 80GB | 528 | 989 TFLOPS | Enterprise Only |

Advanced Language Model Infrastructure

Our GPU platform is optimized for deploying, fine-tuning, and running state-of-the-art language models, enabling you to build sophisticated AI applications with ease.

LLaMA

Deploy and fine-tune Meta's powerful open-source large language model for a wide range of tasks, from conversational AI to code generation.

DeepSeek

Leverage DeepSeek's state-of-the-art models with exceptional capabilities in reasoning, code generation, and multilingual tasks.

Stable LM

Utilize Stability AI's lightweight yet powerful language models, optimized for various applications with efficient resource usage.

Phi

Harness Microsoft's small but mighty Phi models, delivering impressive performance for reasoning and code tasks despite their compact size.

Qwen

Deploy Alibaba's versatile Qwen models that excel in both English and Chinese language tasks with strong reasoning capabilities and multilingual support.

Ollama Integration

Seamlessly deploy and run a wide range of open-source models with Ollama's simplified management system for easy model switching and deployment.

Comprehensive LLM Development Infrastructure

Our GPU platform is specifically optimized for training, fine-tuning, and deploying state-of-the-art language models, with dedicated support for both open-source and proprietary models.

Model Hosting

Deploy your fine-tuned models with our optimized inference infrastructure. Get low-latency API access with elastic scaling capabilities.

Fine-Tuning

Run efficient parameter-efficient fine-tuning (PEFT) on open models with our LoRA, QLoRA, and Adapter-optimized infrastructure.

Dedicated Instances

Get exclusive access to high-performance GPU resources with dedicated machine instances for maximum throughput and performance.

Monitoring & Observability

Advanced monitoring tools for tracking inference costs, latency metrics, usage patterns, and model performance analytics.

Auto-Scaling

Dynamic resource allocation that automatically scales with your traffic demands, ensuring optimal performance while minimizing costs.

Custom Model Support

Run any open-source or custom model with our flexible runtime environment that supports ONNX, TensorRT, vLLM and other accelerators.

Advanced LLM Training Capabilities

Our purpose-built GPU infrastructure delivers exceptional performance for training and fine-tuning language models of all sizes, from foundation models to specialized domain adaptations.

Optimized LLM Training Infrastructure

Leverage our state-of-the-art GPU clusters specifically configured for distributed training of large language models. With high-bandwidth InfiniBand interconnects and specialized memory optimization, we accelerate your model development cycle from weeks to days.

- Distributed Training Multi-node, multi-GPU training with DeepSpeed, FSDP, and Megatron-LM support for models with billions of parameters

- Fine-tuning Optimization Pre-configured environments for LoRA, QLoRA, and full fine-tuning with automated parameter-efficient techniques

- Custom Dataset Processing Data pipeline optimization with automated data cleaning, tokenization, and augmentation services

- Model Evaluation Suite Comprehensive benchmarking tools to evaluate model performance across multiple metrics and tasks

Specialized LLM Workloads

Our infrastructure supports the complete LLM development lifecycle:

Framework Support

Pre-configured environments for all major LLM frameworks:

Our cutting-edge research and innovations in AI technology have been recognized by Malaysia's premier technology institution, highlighting our commitment to advancing AI capabilities in Southeast Asia.

We drive the adoption of Generative AI and LLMs like OpenAI ChatGPT, Anthropic Claude, and Google Gemini, transforming industries through language understanding, content creation, and automation. With cloud access, even small businesses can benefit—making responsible use and policy support essential.

Our Distinguished Consultants

VYROX AI is guided by world-class experts who bring decades of experience in artificial intelligence, computational science, and advanced technology development.

Ts. Dr. Leong Yee Rock

Founder & Chief AI Specialist

Ts. Dr. Leong Yee Rock is a renowned expert in artificial intelligence and Internet of Things technology. With a Ph.D. in Internet of Things from the University of Malaya, he has established himself as a visionary leader in Malaysia's technology landscape and Southeast Asia's AI ecosystem.

As the founder of VYROX International Sdn Bhd, Dr. Leong has developed cutting-edge AI solutions that are transforming industries across Malaysia and beyond. His pioneering research in AI consciousness level definitions has created a framework for categorizing and understanding machine consciousness, bridging the gap between technical implementation and philosophical understanding of AI systems.

Areas of Expertise

Notable Achievements

-

GULU Stock AI Development

Created Malaysia's first AI-powered stock analysis platform for Bursa Malaysia (KLSE), which leverages advanced transformer-based language models specifically fine-tuned for Malaysian equities analysis.

-

AI Consciousness Framework

Developed a groundbreaking framework for understanding and classifying levels of artificial consciousness, which is being used to guide ethical AI development and deployment across various sectors.

Transforming Industries with Advanced AI

Our GPU-accelerated infrastructure and expertise are helping organizations across Southeast Asia harness the power of artificial intelligence to solve complex problems, create new opportunities, and drive innovation.

View GPU Hosting PlansPremium GPU Infrastructure Solutions

Our enterprise-grade GPU infrastructure delivers superior performance with up to 2.8x better throughput than generic cloud providers, while offering comprehensive support and zero hidden costs. Organizations deploying our solutions typically achieve ROI within 90 days through enhanced productivity and reduced total ownership costs.

Choose Your Performance Tier

*Committed pricing requires 12-month term with guaranteed resource availability

Complete AI Infrastructure Solutions

Enterprise Inference

- 4x A10 GPUs (auto-scaling)

- Elastic inference API endpoints

- Request caching & load balancing

- Up to 1.5M inference requests/day

- 99.9% uptime SLA

AI Research Suite

- 2x A100 (80GB) dedicated GPUs

- Managed JupyterLab environment

- 2TB NVMe storage with snapshots

- Pre-configured LLM frameworks

- Model versioning & experiment tracking

Premium Enterprise Benefits

True Per-Second Billing

Unlike other providers that round up to the nearest hour or have hidden minimums, we bill exactly for what you use down to the second with no minimum usage requirements, saving you up to 42% compared to providers with per-hour billing.

Enterprise-Grade Security

Our infrastructure is ISO 27001:2022 certified with strict data protection protocols, hardware-level tenant isolation, and optional data sovereignty features to meet the most demanding compliance requirements.

Zero Data Transfer Fees

We never charge for ingress, egress, or API traffic between services—saving you up to 40% compared to hyperscalers. Transfer terabytes of data and serve high-traffic models with absolutely no bandwidth charges or hidden fees.

Dedicated AI Engineering Team

Get direct access to our team of experienced ML engineers with expertise in optimizing large-scale models. We provide architecture guidance, performance tuning, and ongoing support that helps reduce training times by up to 67%.

Why Choose VYROX AI?

See how our enterprise-grade AI infrastructure compares to major cloud GPU providers

Frequently Asked Questions

Why are your prices higher than some providers?

Our premium pricing reflects our AI-optimized infrastructure, which delivers up to 2.8x better performance than generic cloud providers. With our specialized optimizations, you'll achieve better results faster—ultimately saving money through reduced training times and more efficient resource usage.

Are there any hidden costs?

None. Unlike most providers, we never charge for data transfer or API calls. Our per-second billing ensures you only pay for exactly what you use, with no minimums or rounding up to the nearest hour or minute. What you see is what you pay.

What makes your infrastructure different?

Our entire stack is built specifically for AI workloads with optimized networking, storage, and software configurations. We use premium NVIDIA GPUs with specialized driver tuning and framework optimizations that deliver significantly better throughput than standard cloud deployments.

How does committed pricing work?

Our 12-month committed plans offer substantial discounts (up to 35%) with guaranteed resource availability, priority access to newer hardware, and enhanced support response times. You maintain the same flexible usage patterns but at significantly reduced rates.

Ready to Experience Enterprise-Grade GPU Infrastructure?

Get in touch with our team to discuss your specific AI infrastructure needs, receive a personalized quote, or schedule a performance benchmark demonstration.

Why Leading Organizations Choose VYROX AI

We provide the essential combination of cutting-edge hardware, optimized software, and expert support that enables your organization to successfully implement AI and high-performance computing solutions.

Instant Deployments

Get your AI models up and running in minutes with our streamlined deployment process. No more waiting for hardware provisioning or complex setup procedures.

Pay-As-You-Go Pricing

Only pay for the GPU resources you actually use with our flexible pricing model. Scale up during high demand and scale down when you don't need the extra capacity.

Global API Endpoints

Serve your models from edge locations around the world with our distributed inference network. Reduce latency for your users no matter where they are located.

Private Model Hosting

Keep your trained models and training data secure with our isolated infrastructure. We ensure your intellectual property remains protected while maintaining high performance.

Optimization Services

Our team of ML engineers can help optimize your models for production with quantization, distillation, and throughput optimizations that reduce your hosting costs.

Developer-First API

Our developer-first APIs achieve 99.9% uptime with just 1.2ms average response time. Comprehensive SDKs for Python, JavaScript, Java, and Go reduce integration time from weeks to hours, with most clients deploying to production within 3 days of onboarding. Our customers report 85% fewer support tickets compared to previous infrastructure providers.

What Our Clients Say

Organizations across various industries have accelerated their AI initiatives and achieved breakthrough results with our GPU infrastructure and expertise.

After migrating from a major cloud provider to VYROX AI, our financial model training times decreased by 83% while our infrastructure costs dropped by 42%. Their team's expertise helped us optimize our LLM pipeline, resulting in a 3.5x throughput improvement. This translated directly to a 27% increase in our trading platform's accuracy and a competitive edge that boosted our client acquisition by 31% year-over-year.

As a research institution, we needed reliable, high-performance computing resources for our molecular dynamics simulations. VYROX AI's infrastructure and support team have exceeded our expectations, enabling breakthrough discoveries.

Deploying our AI models in production was a challenge until we partnered with VYROX AI. Their infrastructure has proven to be reliable, secure, and scalable, allowing us to focus on innovation rather than operations.